Not what you think: The onward rise of AI impersonation scams

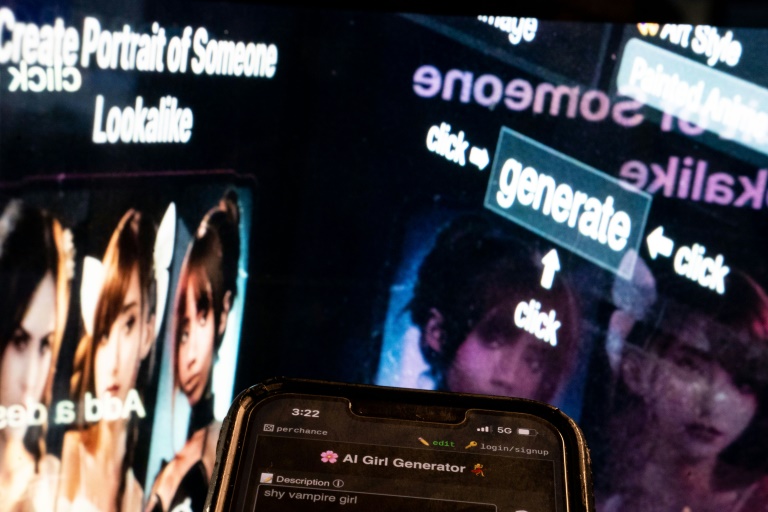

Anyone with a smartphone and specialized software can create the harmful deepfake images – Copyright AFP Mark RALSTON

AI impersonation scams are set to rise further in 2025, according to fraud investigation experts. Last year, such criminal activity was reported to be the third-fastest-growing type of scam of 2024.

In response, the firm AIPRM has issued advice on how to detect an AI impersonation scam and how to stay safe.

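Most Searched AI Scam Terms Over the Past 12 Months

| Rank | Search term | Average monthly searches |
|---|---|---|
| 1 | Deepfakes | 178,000 |
| 2 | AI voice cloning | 23,000 |
| 3 | AI deep fakes | 2,400 |
| 4 | AI scams | 1,800 |
| 5 | AI phishing | 500 |
| 6 | AI cloning | 400 |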

AIPRM found that “deepfakes” was searched for 178,000 times a month on average. This comes as no surprise: deepfakes continue to grow in prevalence, with a 2,137 percent rise in deepfake scam attempts over the last three years alone.

“AI voice cloning” has also been gaining traction, with 23,000 searches a month on average. Voice cloning was among the fastest-growing scams of 2024, and 70 percent of adults are not confident that they could tell a cloned voice from the real thing.

AIPRM offers the following advice for tackling these scams:

AI voice scams

Scammers need just three seconds of audio to clone a person’s voice and use it in a scam call. Because these scams are simple to create and hard to identify, it is worth keeping the following tips in mind.

The caller will typically claim to be a friend, family member, colleague, or someone else you know. Ask the caller a question that only the real person would know the answer to, or agree on a secret phrase that only you and they know. If the caller cannot give the correct response, it is likely a scammer.

If you hear your friend’s or loved one’s voice only briefly, that could be a warning sign: scammers often use a voice clone for just a short time, knowing that the longer it is used, the higher the risk that the recipient catches on.

A call from an unknown number can be a strong indication of a scam, as AI voice scams often use unknown numbers to make unsolicited calls. If the caller claims to be a company or someone you know, hang up and call them back on a known number, either from your contact list or from the company’s official website.

AI phishing & text scams

A suspicious text or email could be an AI impersonation scam, so there are some key things to consider before taking action. Check the sender and verify their phone number or email address; if it is unfamiliar to you, it is best to ignore the message. Poor spelling and grammar can be another red flag, as these can be common in AI-generated messages, which lack human understanding and context.

A major indicator of an AI scam message is an urgent request: scammers use urgency to pressure you into handing over important information. Legitimate organisations do not typically request sensitive information by text.

You should also avoid clicking any suspicious links in texts. If a link appears to point to a website you know, go to that site directly in your browser and log in from there.

AI-generated listings

Scammers can use AI to craft images, descriptions, and other fake content to generate bogus listings online and on social media. Such listings are expected to rise in 2025, particularly since Meta has abandoned fact-checking on its platforms. They can range from retail products to rental properties and even job listings. There are some key factors to look out for if you are doubtful about a listing you see online.

Listings that ask for a payment or deposit are typical of scams, and scammers tend to use urgency to grab the viewer’s attention. If a listing makes you feel pressured, it is best not to act on it. A listing may also direct you to a different site to make a payment; this tactic can lead to financial fraud, so do not enter any details.

A key piece of advice for any listing you see online is to contact the company through trusted channels, such as its official website or a known contact number.
